
Rain Hell From Above

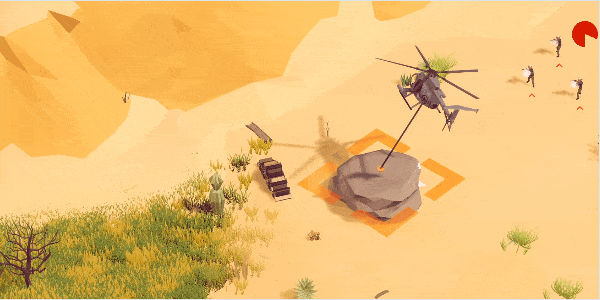

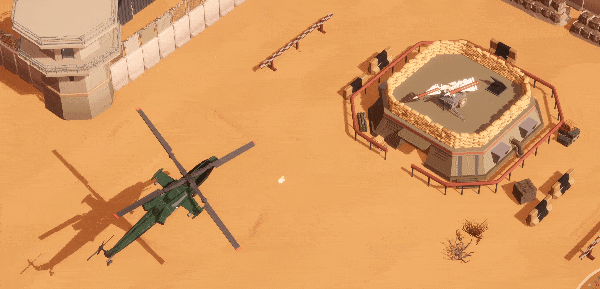

CLEARED HOT is a nostalgic helicopter shooter with satisfying physics and light tactical elements. Shoot, dodge, move your squad around the battlefield, and pick up almost anything with your rope and magnet.

Hot Physics Action

With fully physics-based action, you can get creative and use the environment to your advantage. Send enemies ragdolling through the air with your rockets, or use your rope to throw rocks at the enemy.

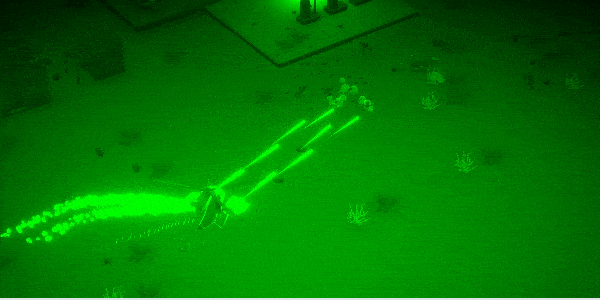

Night Missions

The action is even hotter at night. Fly under the cover of darkness and use your night vision or thermal goggles to get the jump on the enemy.

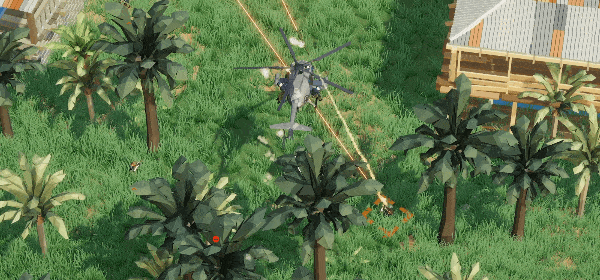

Protect Your Squad

Transport your squad to take enemy territory, or position them to defend your own base. Deploy them wisely to complete your objectives, and try not to drop too many along the way.

Get Creative

Haul a**…and just about anything else. Redirect heat-seeking missiles. Carry a tank to help you assault an enemy base. Pick up enemies and send them flying to their death. You can pick up anything that's not tied down, so get creative.

Features

Fully physics-based: pick up vehicles and enemies, or redirect heat-seeking missiles. Discover emergent gameplay.

Wide range of physics-based enemy vehicles: tanks, jeeps, technicals, anti-air turrets, boats, and fighter jets

Full single player campaign

Customizable loadouts

Day and night missions

Buy and upgrade helicopters

Desert, jungle, and arctic biomes

